News & Updates

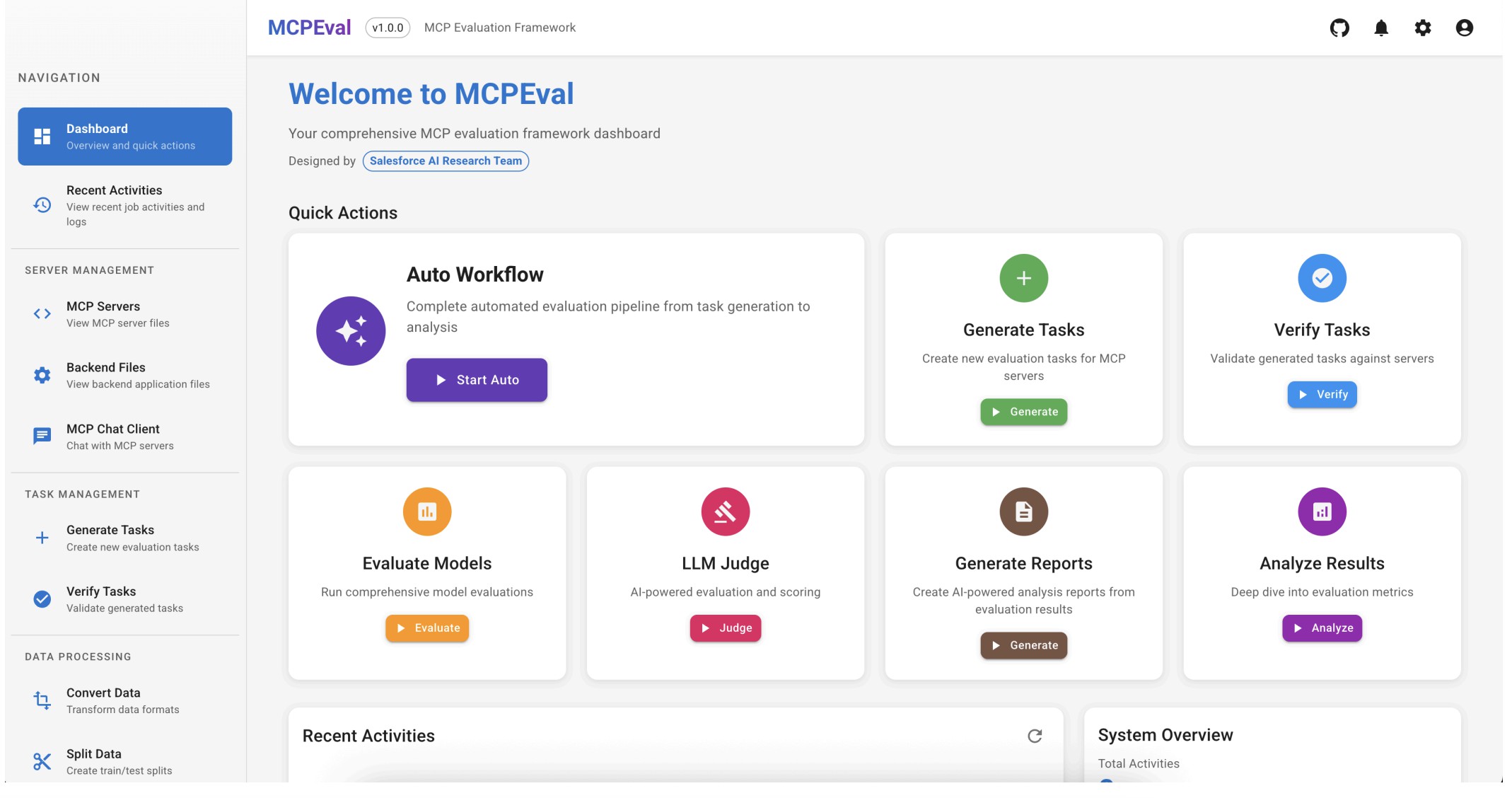

- [2025/05] Our APIGen-MT pipeline and xLAM-2 models have been highlighted in major tech media including VentureBeat, CIO, and ZDNET!

- [2025/04] Our new paper on the

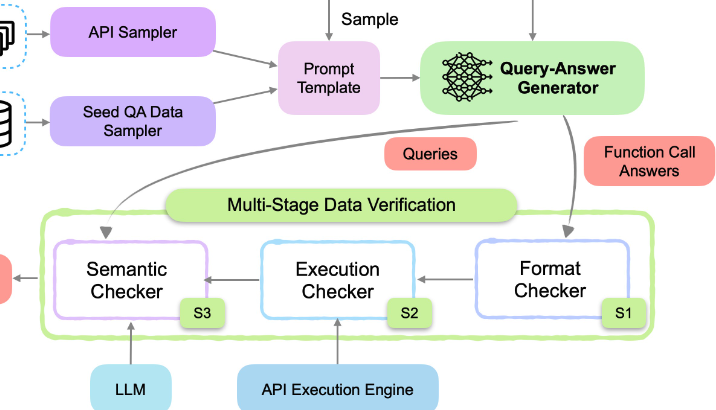

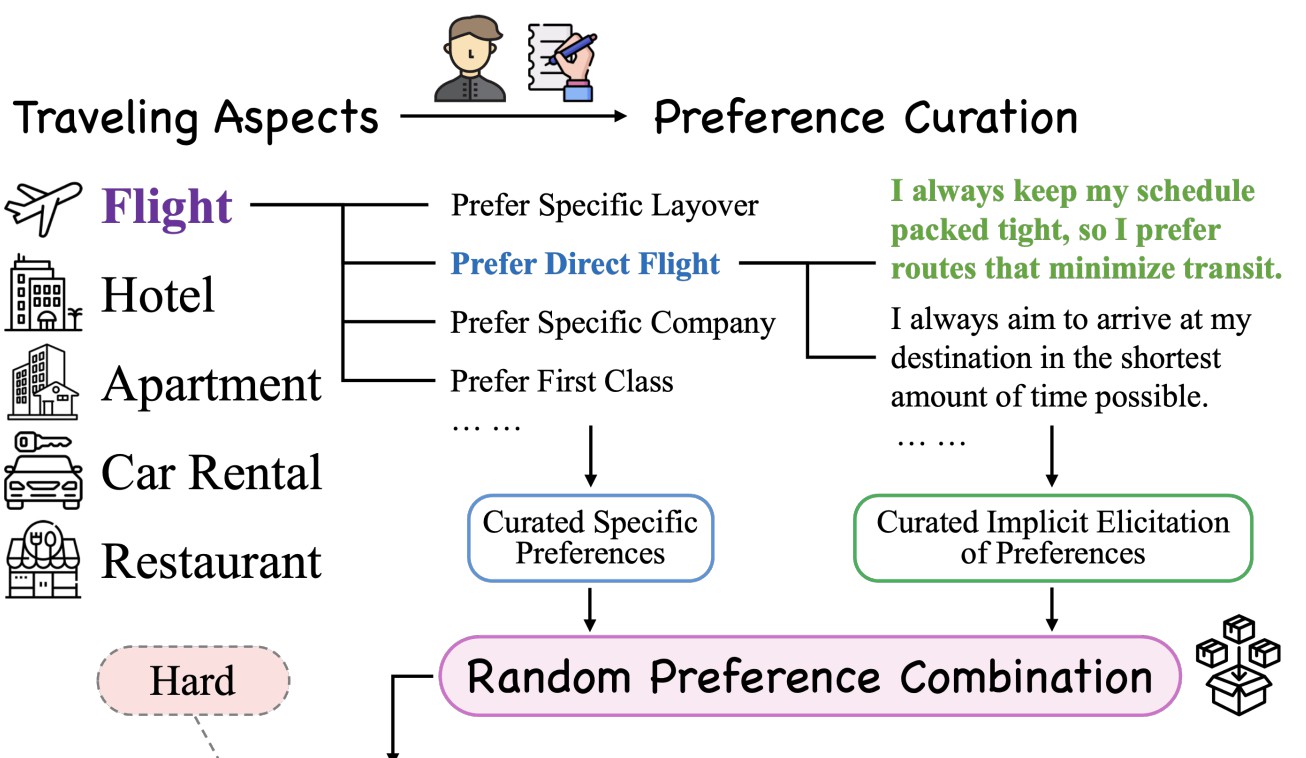

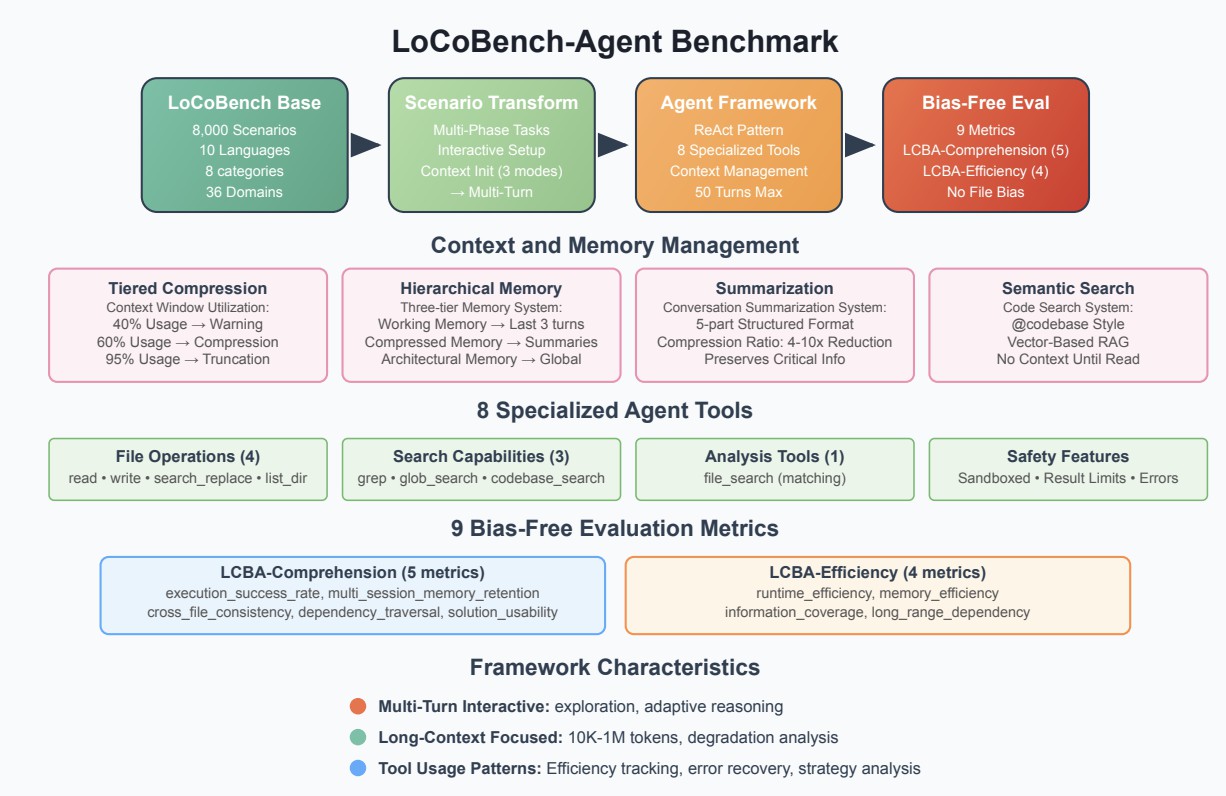

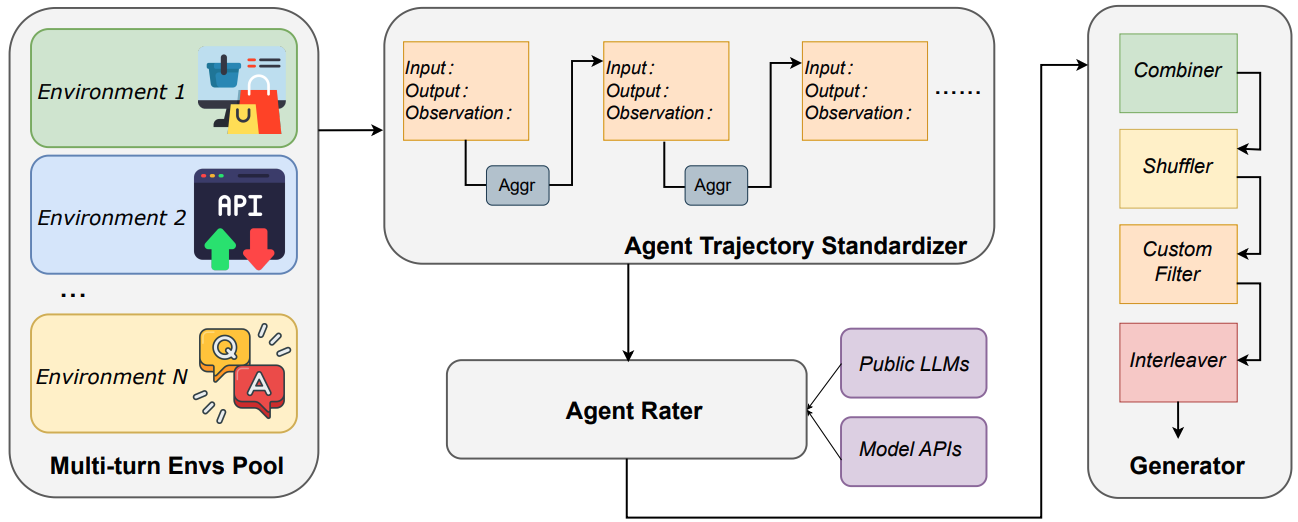

agentic data synthesis that powers our xLAM-2 models is released! Check out APIGen-MT for more details.

- [2025/04] Our xLAM-2 models just got an upgrade with multi-turn support! Our 70B model ranks #1 and our 32B model ranks #2 on the BFCL function-calling leaderboard, beating GPT-4o, Gemini, Qwen & more. Even one of our smaller models, xLAM-8B-r, lands at #4, ahead of GPT-4o.

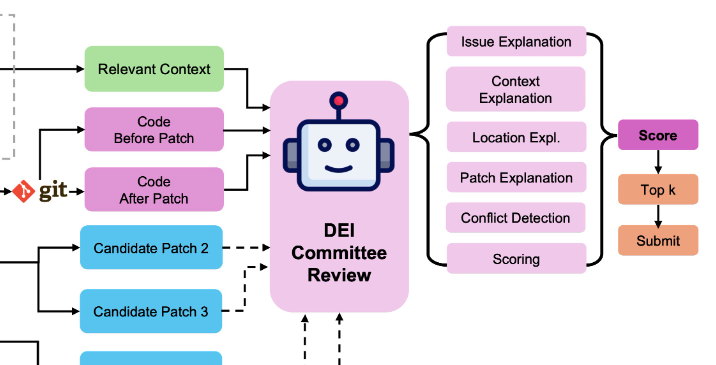

- [2025/01] Our SWE-agent paper, Diversity Empowers Intelligence (DEI), is accepted by ICLR 2025!

- [2024/10] Our APIGen paper is accepted by NeurIPS 2024!

- [2024/09] Check out our xLAM blog post and Technical Report Paper for insights into Salesforce's Large Action Models.

- [2024/08] We are thrilled to announce the release of the entire xLAM family, our suite of Large Action Models, from the "tiny giant" 1B model to the industrial powerhouse 8x22B model. These models have achieved impressive rankings, placing #1 and #6 on the Berkeley Function-Calling Leaderboard. Explore our Hugging Face collection for more details.

- [2024/07] We are excited to announce the release of our two function-calling models: xLAM-1b-fc-r and xLAM-7b-fc-r. These models have achieved impressive rankings, placing #3 and #25 on the Berkeley Function-Calling Leaderboard, outperforming many significantly larger models.

- [2024/06] Check out our latest work, APIGen, the best open-source models for function calling. Our dataset is among the top 3 trending datasets on Hugging Face as of July 4, 2024. See also the tweet by the Salesforce CEO and the coverage by VentureBeat and 新智元.

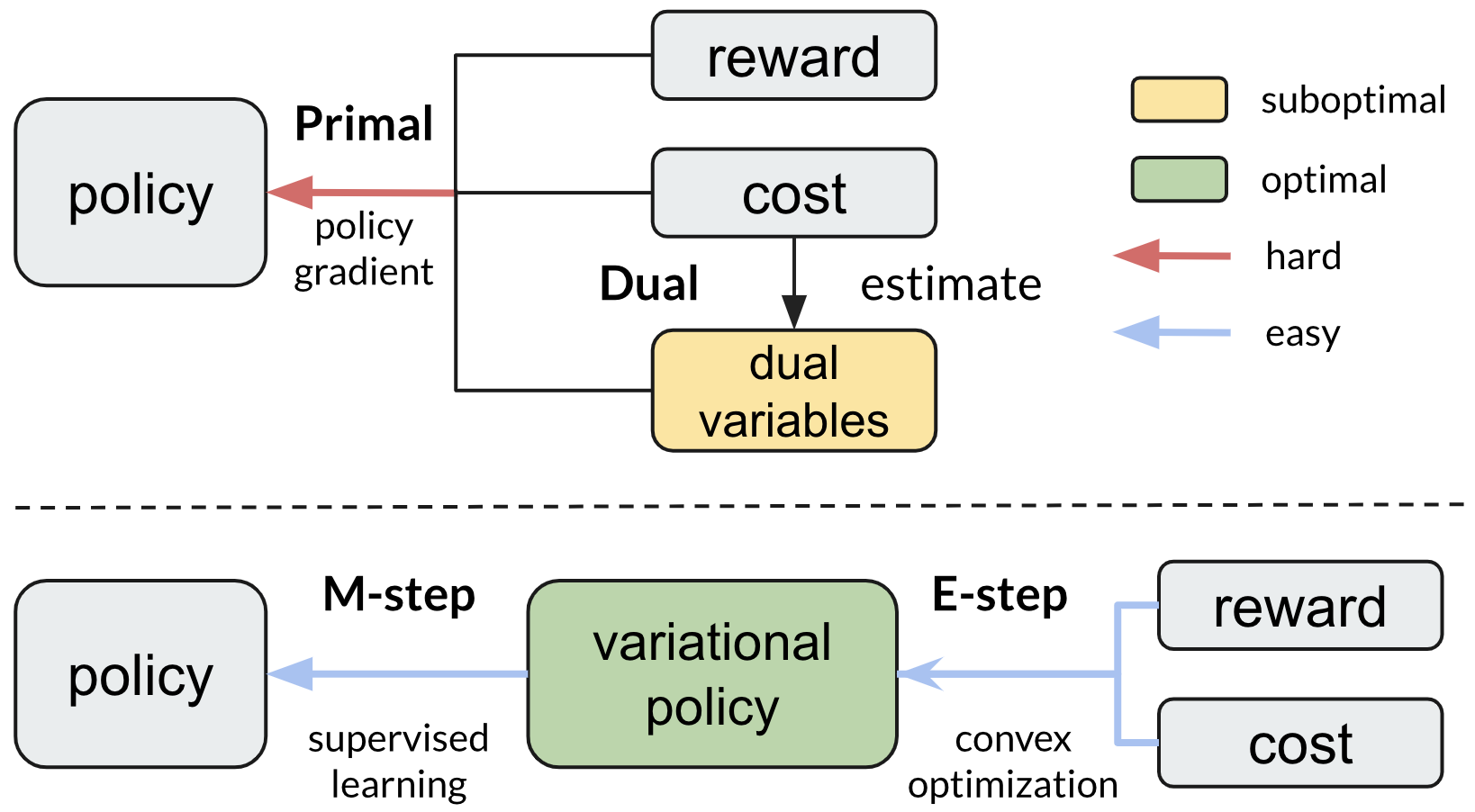

- [2024/05] Our paper on efficient and safe RL is accepted by ICML 2024!

- [2024/04] Our RL dataset and benchmark paper is accepted by the DMLR Journal! Check out the website for details!

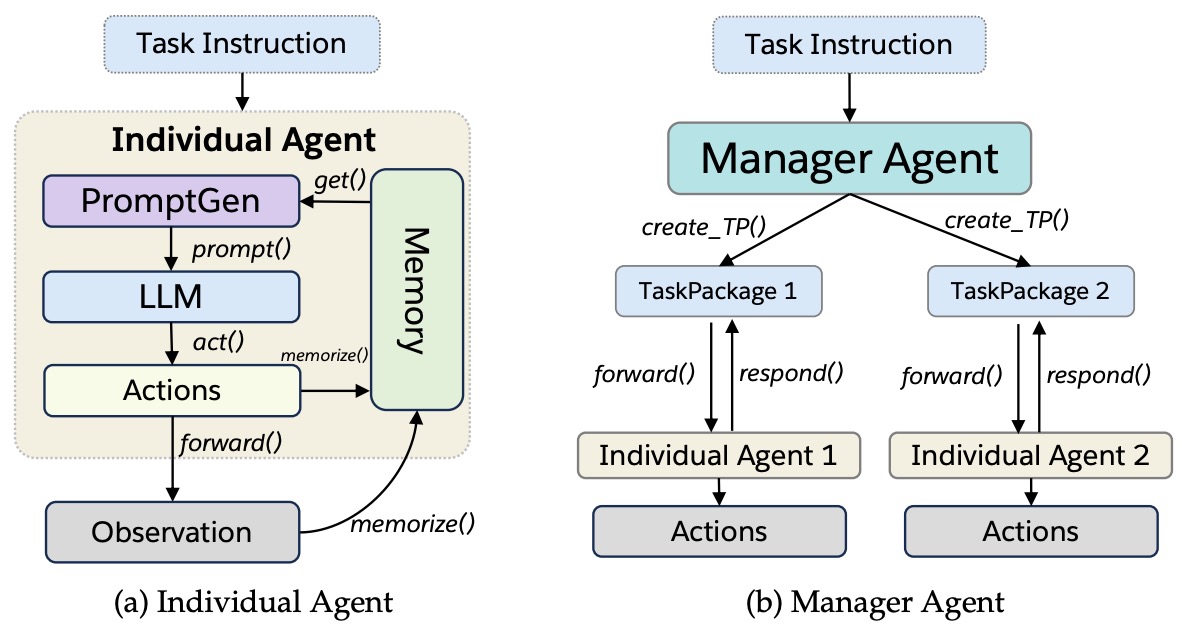

- [2024/02] We release our multi-LLM-Agent framework AgentLite library and paper!

- [2024/01] I joined Salesforce AI Research as a Research Scientist! Looking forward to working with the amazing team members on LLM agents!

- [2024/01] Our two papers, one about efficient foundation model adaptation and one about offline RL, are accepted by ICLR 2024!

- [2024/01] Our paper about robustness certification is accepted by AISTATS 2024!

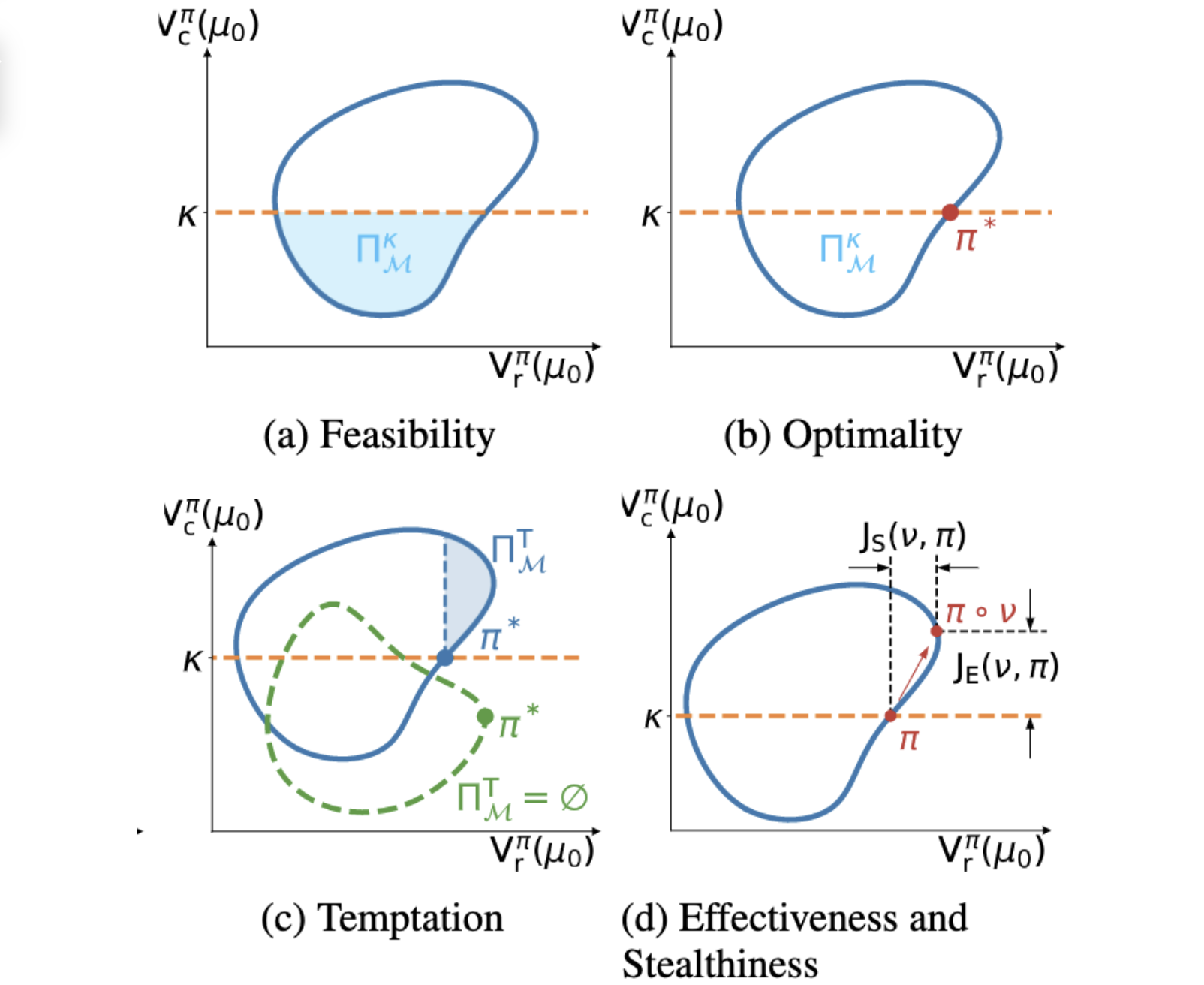

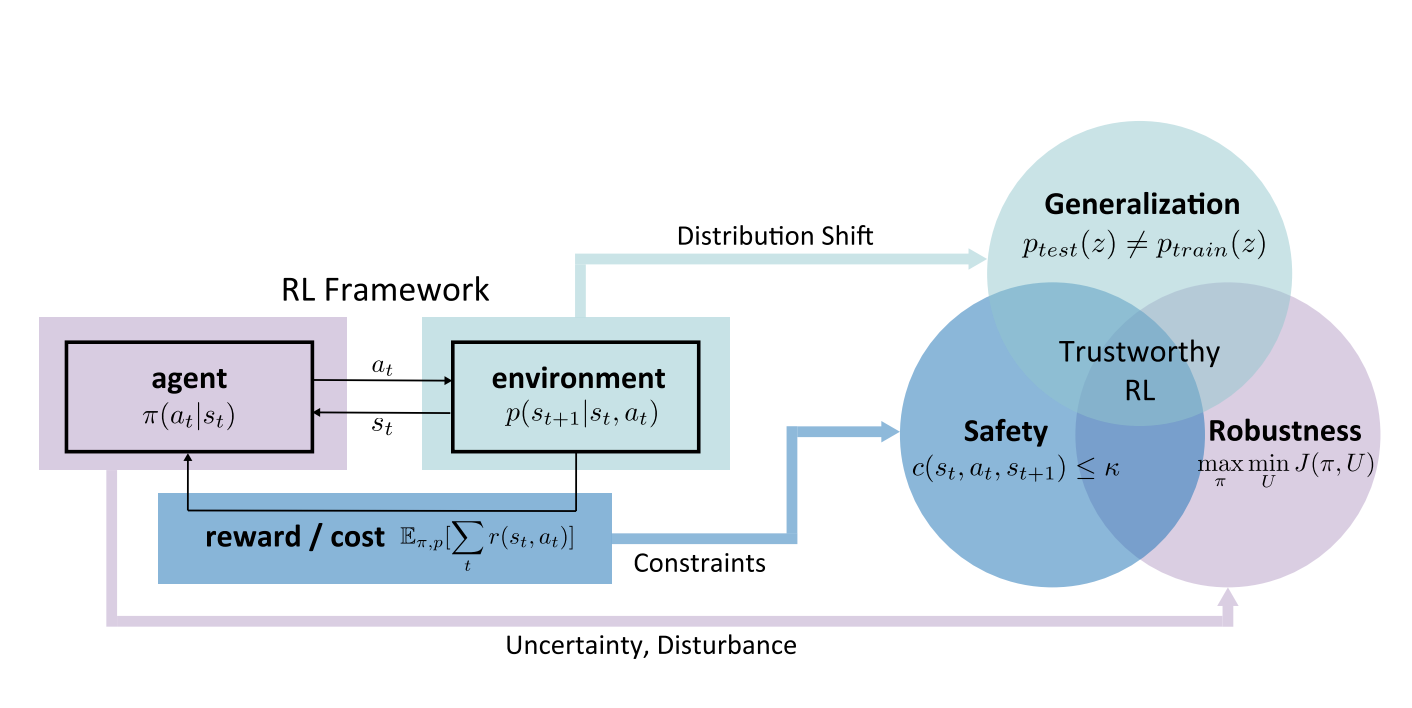

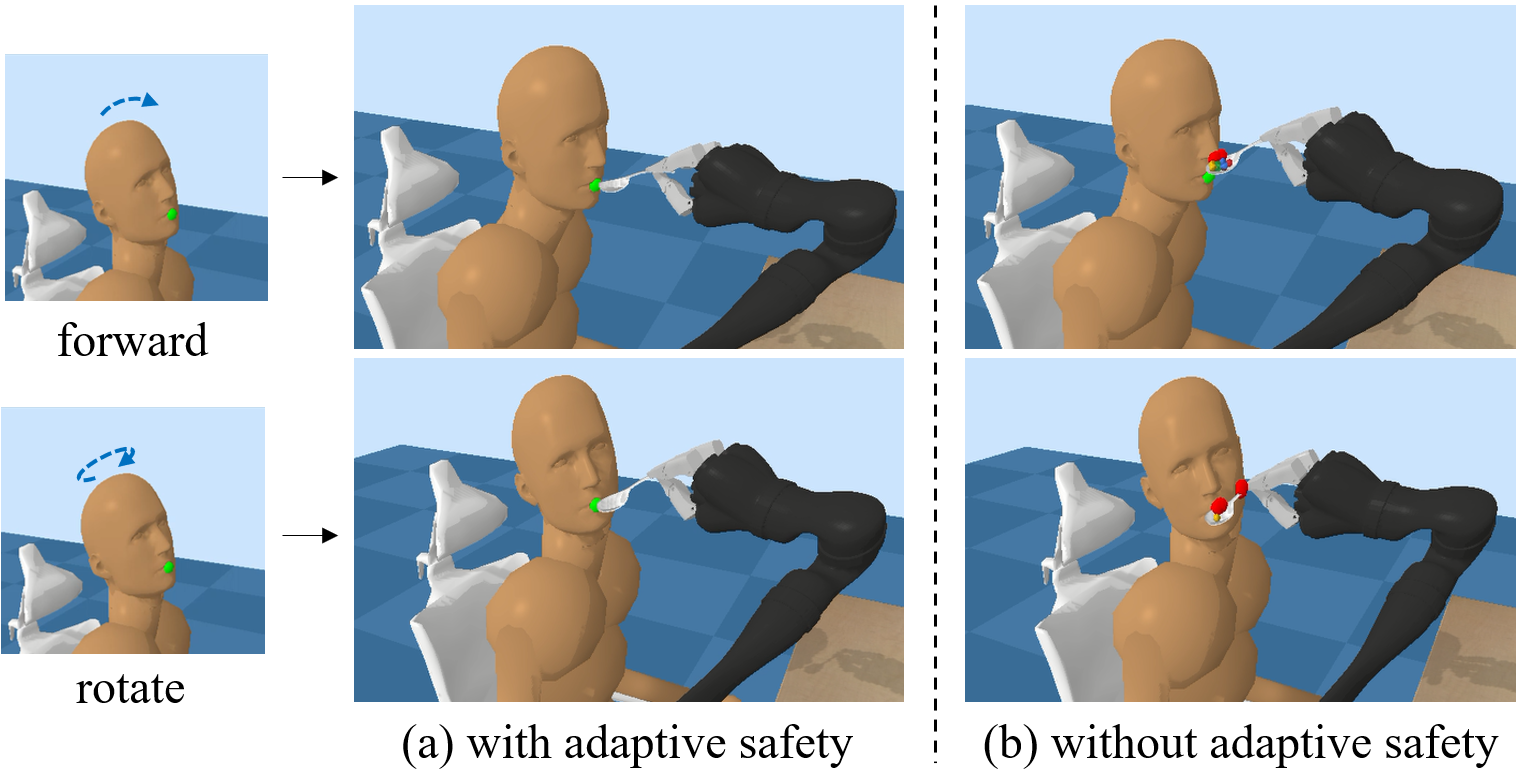

- [2023/09] Our two papers for safe RL, one about versatile policy learning and one about inverse constraint learning, are accepted by NeurIPS 2023!

- [2023/06] Our comprehensive datasets, benchmarks, and algorithms for offline safe learning are released! Check out our website for details!

- [2023/05] A fast safe reinforcement learning framework is released! Check out our GitHub repo for details!

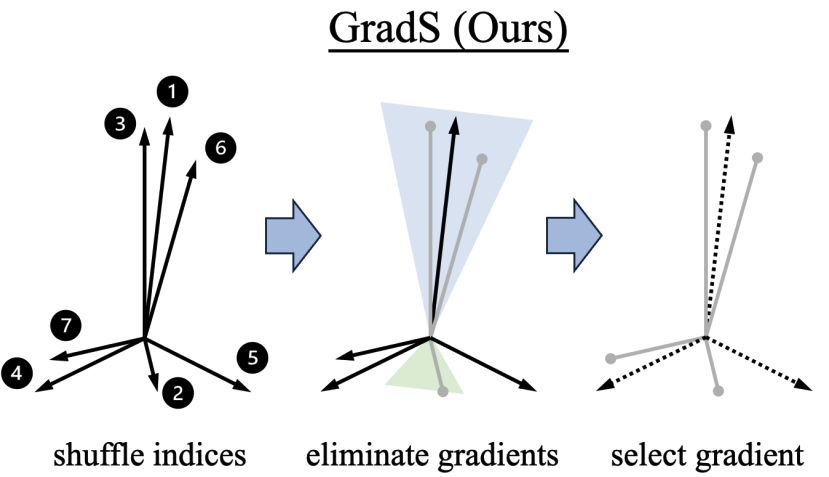

- [2023/04] Our two papers for safe RL, one about robustness and one about offline learning, are accepted by ICML 2023!

- [2023/01] Our paper about observational robustness in safe RL is accepted by ICLR 2023!

- [2022/12] Our paper about robustness in safe RL won the AI Risk Analysis Award at the 2022 NeurIPS ML Safety Workshop!

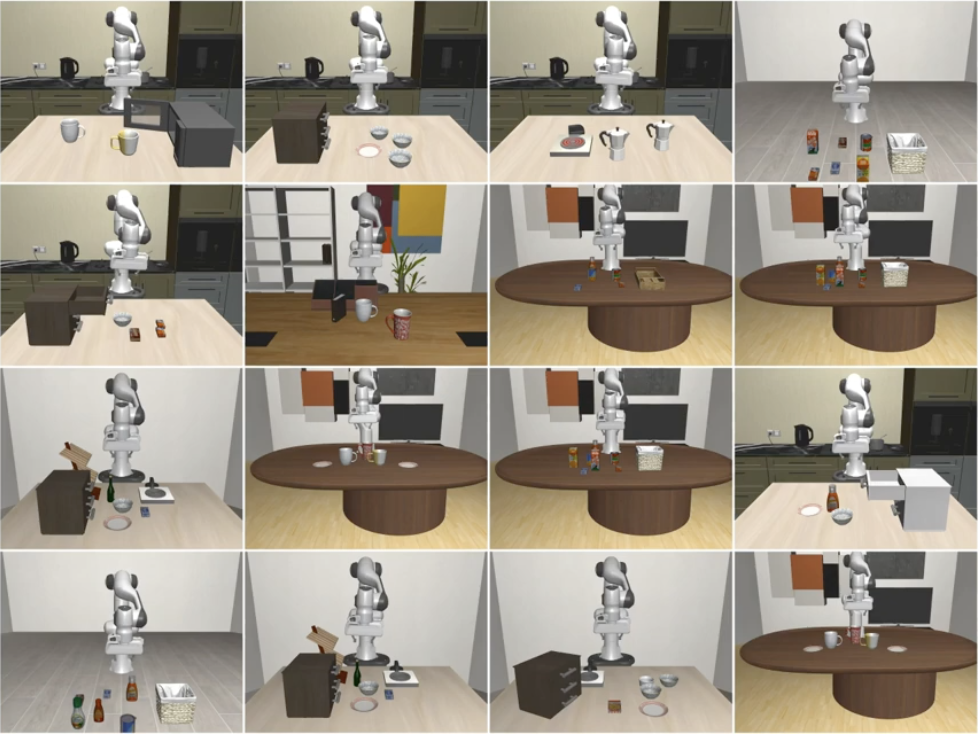

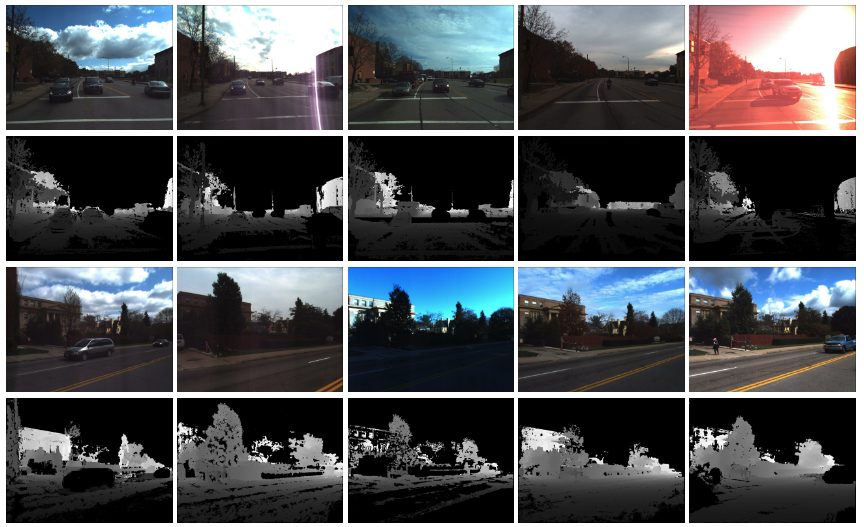

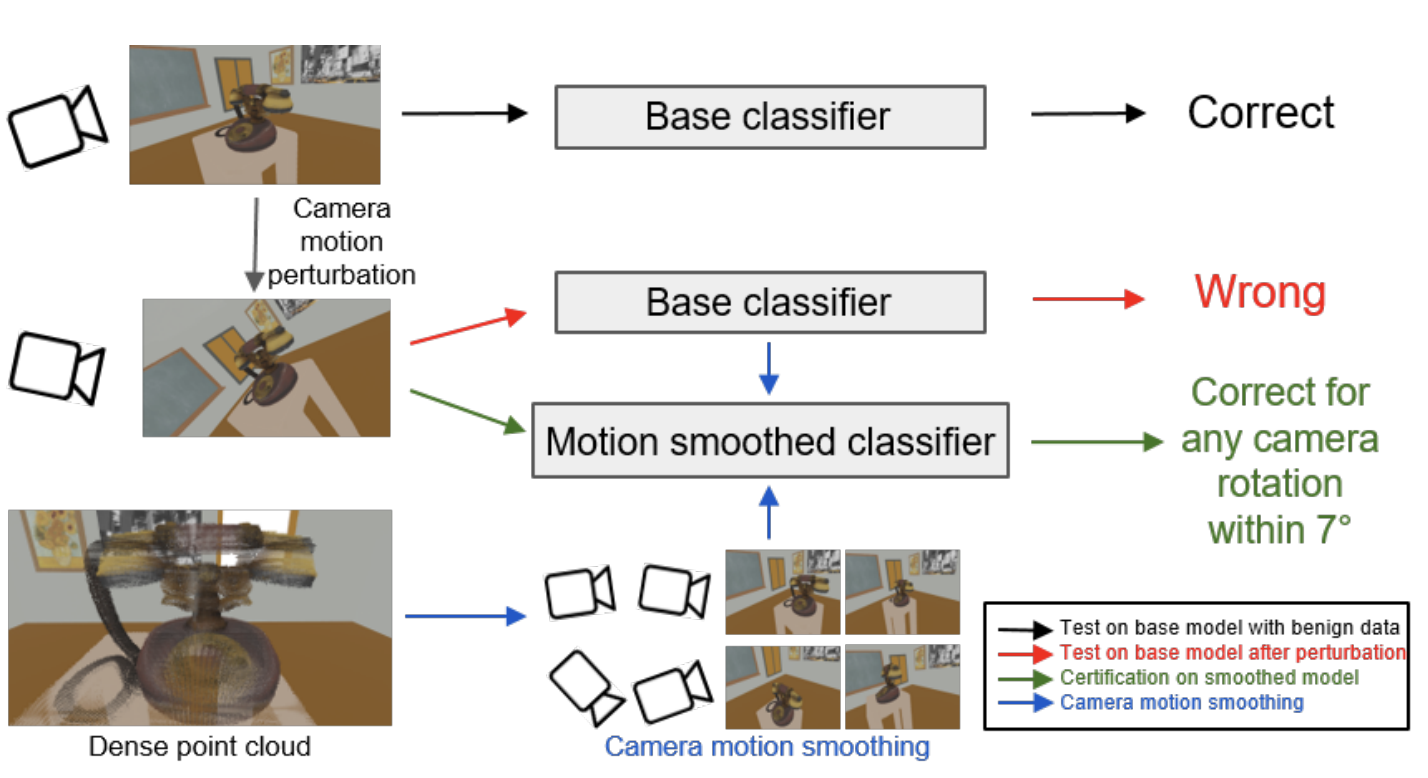

- [2022/09] Our work about robustness certification in visual perception is accepted by CoRL 2022.

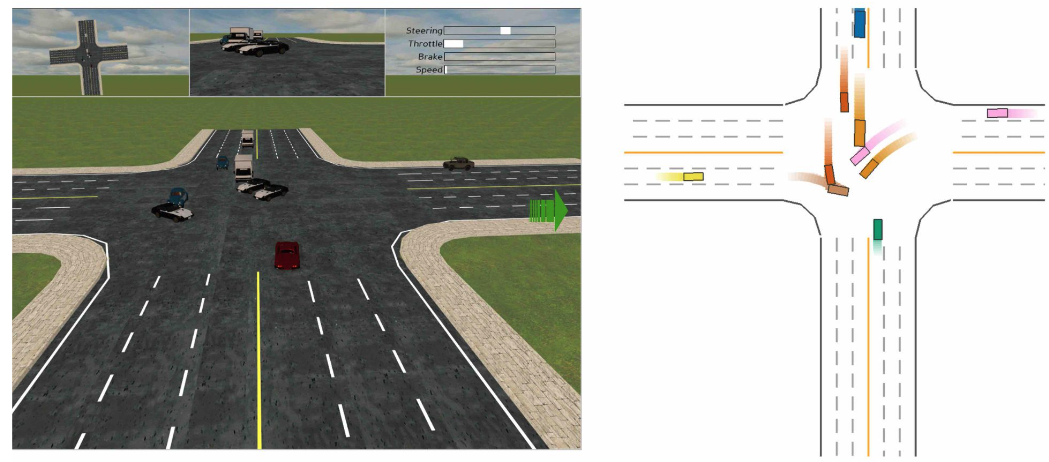

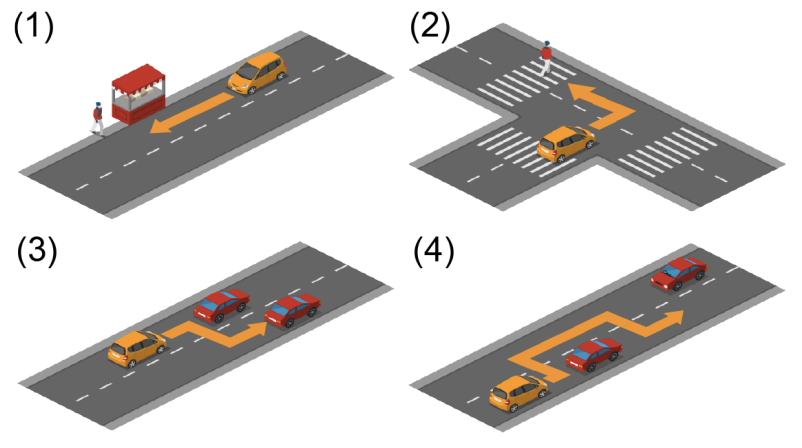

- [2022/09] Our work about safety evaluation for self-driving vehicles is accepted by NeurIPS 2022.

- [2022/07] Our paper about robustness in safe RL won the best paper runner-up award at the SL4AD Workshop at ICML 2022!

- [2022/07] I was glad to present my work on safe RL to the Google DeepMind robotics team.

- [2022/05] Our paper about a variational inference approach for off-policy safe RL is accepted by ICML 2022!

- [2022/05] I gave a talk about recent advances in safe RL at Prof. Fei Fang's lab.

- [2022/04] Our work about safe learning for delivery robots is featured on the front page of CMU news!

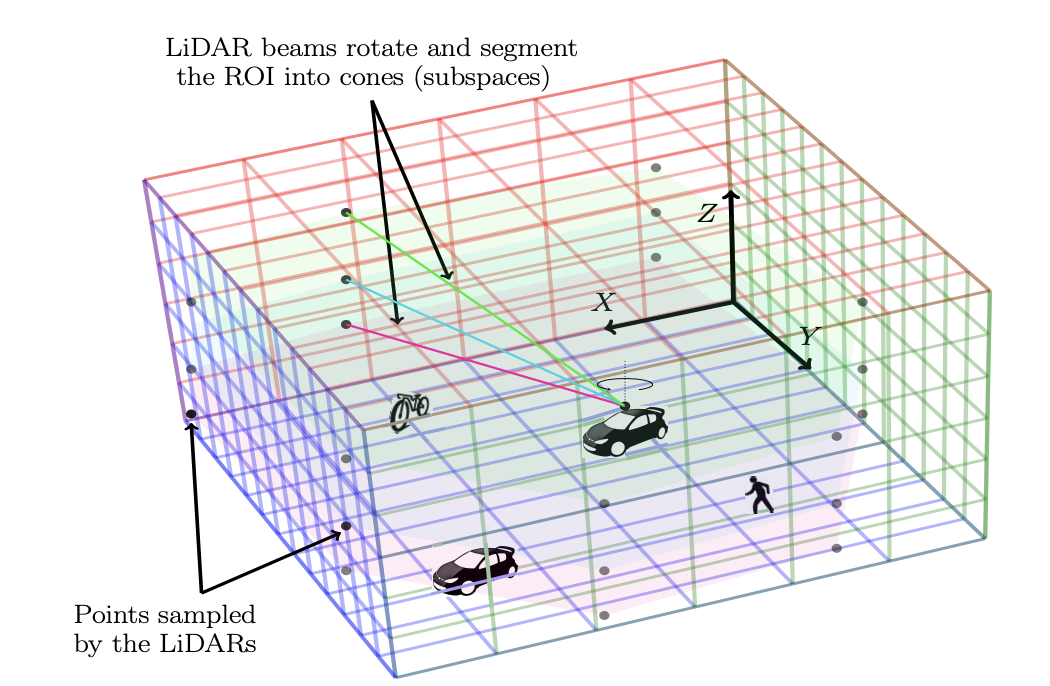

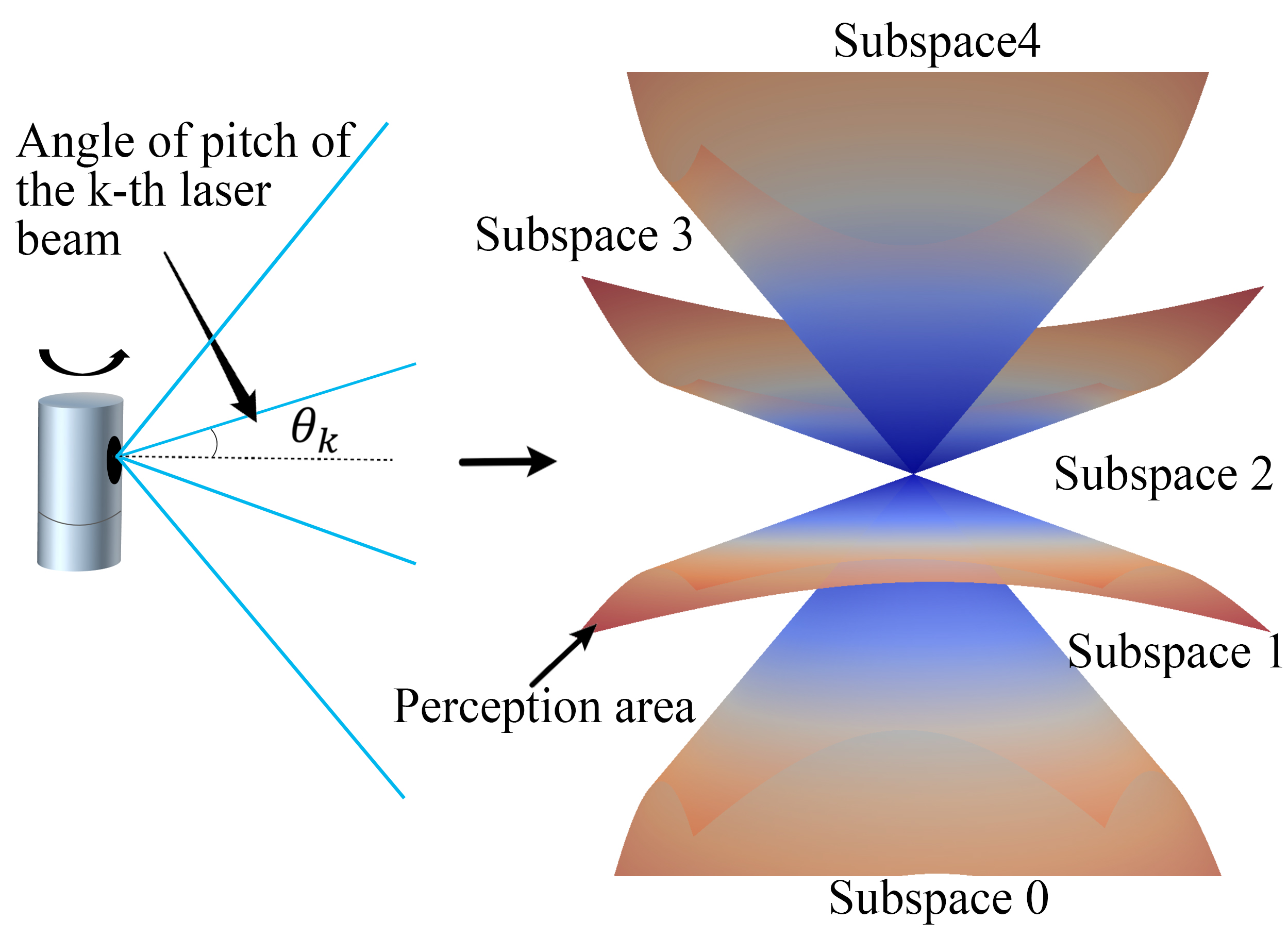

- [2022/03] Our paper about LiDAR sensing in autonomous vehicles is accepted by CVPR 2022!

- [2021/11] The autonomous delivery robot that we spent a year building is featured by CMU Engineering.

- [2021/07] We won the Hackathon during my internship at Nuro! I really enjoyed solving challenging real-world problems for self-driving.

Research Interests

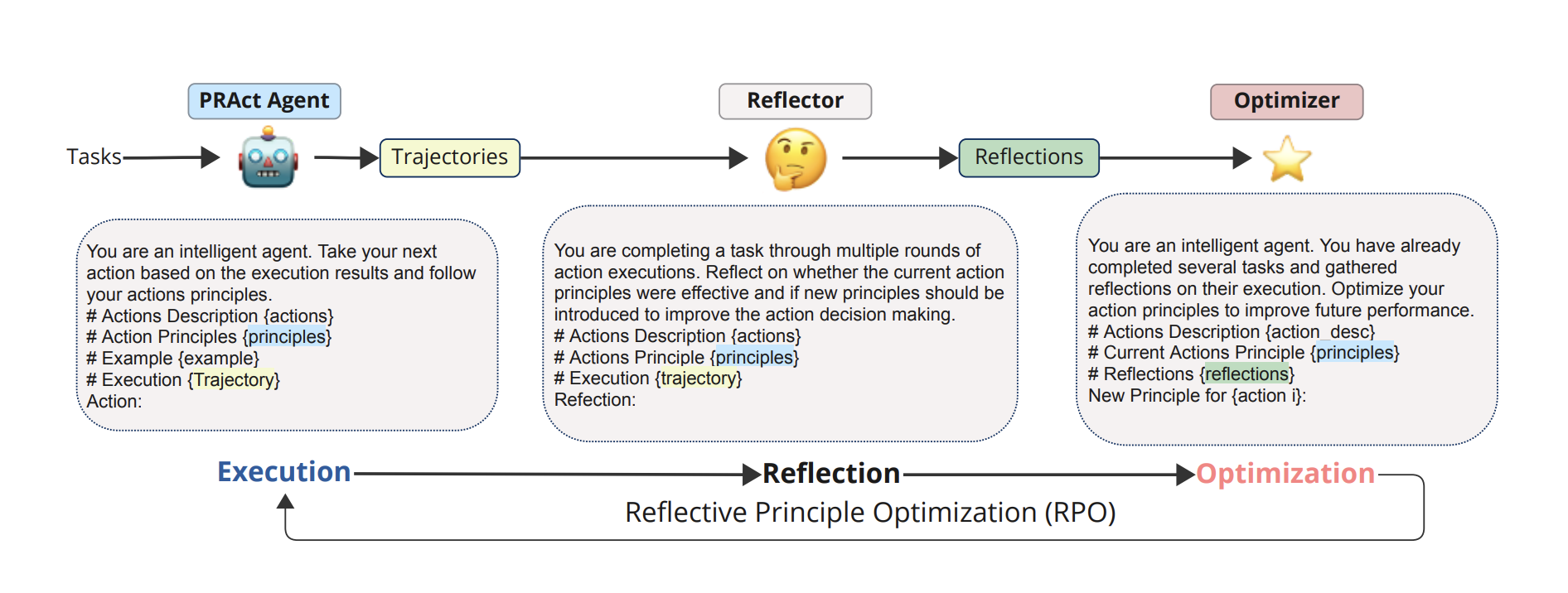

My long-term ambition is to develop AI agents capable of achieving and surpassing human-level performance in various daily tasks, ultimately freeing humans from repetitive work and enhancing productivity. Beyond the commonly recognized capabilities like reasoning and planning, I believe that continual self-evolution (in terms of training) and self-reflection (during deployment) are also essential traits of truly intelligent agents. This aligns with the core principles of reinforcement learning (RL), which I view as a guiding philosophical framework for thinking about and studying AI agents.

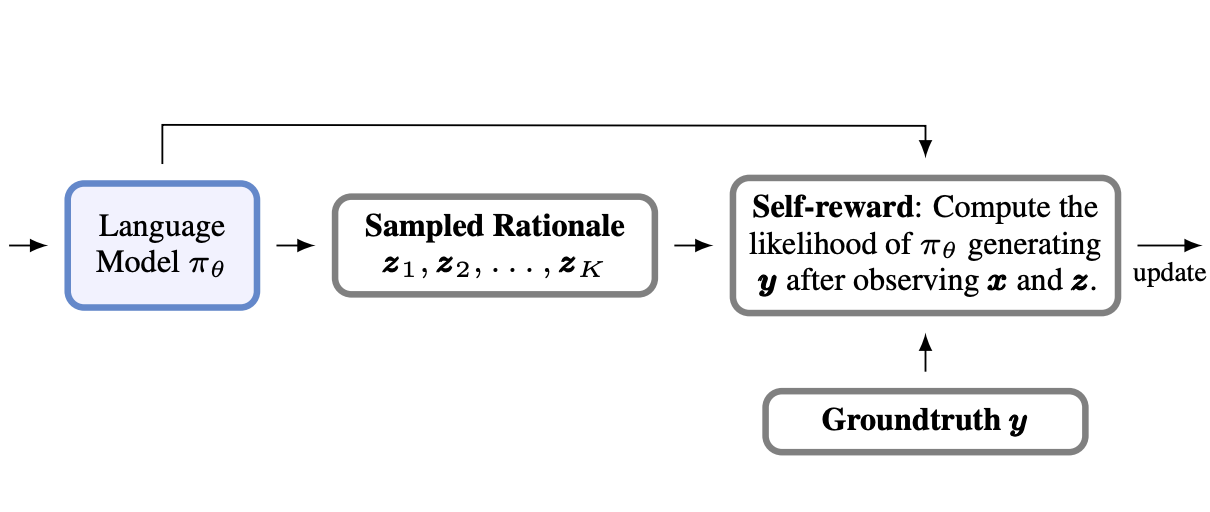

My research aims to apply the foundational principles, not just the methods, of RL to large language model (LLM)-based agents, contributing to the promising future of AI agent systems that evolve, learn, and interact in ways that complement and enhance human capabilities. Currently, I am developing scalable approaches, such as utilizing synthetic data, to improve models' agentic abilities, and leveraging environmental feedback to better enable self-learning and reflection, as exemplified by the Software Engineering Agent.